Research Interests

Affective Computing, Cognitive Modeling, Human-Computer Interaction, Virtual Humans, Persuasive Technology

My research is directed toward developing human-like software agents for virtual training environments and toward using these computational methods to concretize psychological theories of human behavior. Specifically, I investigate how algorithms can control the behavior of characters in virtual worlds, endowing them with an ability to think and engage in socio-emotional interactions with human users, using both verbal and nonverbal communication. Such methods can deepen our understanding of human behavior by instantiating and systematically manipulating psychological theories. They also have wide application to such areas as training, entertainment and clinical diagnosis, assessment and treatment.

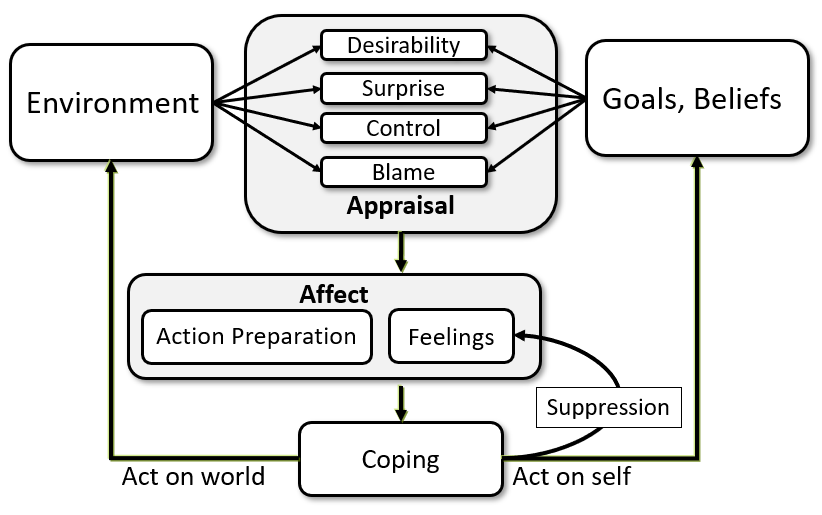

Emotion Modeling

Emotions have a pervasive impact on our lives. They shape how we perceive the world and how we make decisions, and they play a key role in social communication. In the context of virtual training environments, emotions also play a key role in the believability of the simulation and the extent to which a student will feel immersed in the experience. Realizing psychological theories as working computational models advances science by forcing concreteness, revealing hidden assumptions, and creating dynamic artifacts that can be subjected to empirical study. These two videos give a high-level overview of our emotion modeling research: Sweet Emotion, CACM

Virtual Humans

The Virtual Human Project brings together research in intelligent tutoring, natural language recognition and generation, interactive narrative, emotional modeling, and immersive graphics and audio. The focus is on creating a highly realistic and compelling training environment. The system includes an 8'x30' wrap-around screen, 10.2 channels of immersive audio, and interactive synthetic humans that can interact with the trainee and respond emotionally to their decisions. Applications of this technology include the Mission Rehearsal Exercise [MRE Movie Clip] and the Stability and Support Operations [SASO Movie Clip] training prototypes.

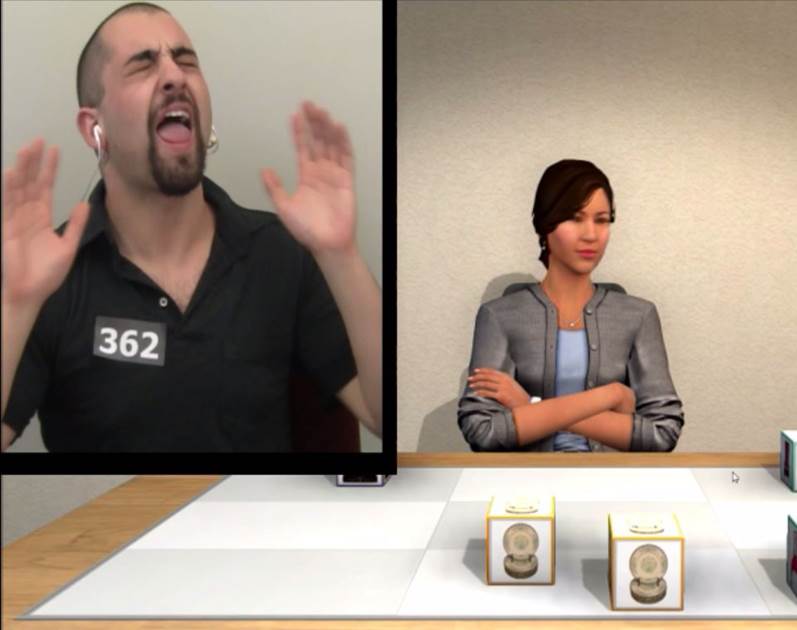

Social Emotions and Rapport

When people interact, their speech prosody, gesture, gaze, posture, and facial expression contribute to the establishment of a sense of rapport. Rapport is argued to underlie success in negotiations, psychotherapeutic effectiveness, classroom performance and even susceptibility to hypnosis.

The rapport project uses machine vision and prosody analysis to create virtual humans that can detect and respond in real time to human gestures, facial expressions and emotional cues and create a sense of rapport. These techniques have a demonstrable beneficial impact on human interaction. [Rapport Movie Clip]

Anthropomorphism in Human-Machine Interaction

There is growing interest in endowing machines with more human-like characteristics, fueled by the assumption that this will enhance the effectiveness of human-machine interactions. Research has demonstrated that machines can be made more human-like, but less research has considered whether this benefits or harms human-machine team performance. Indeed, a review of the literature illustrates that human-like qualities can result in unintended and disruptive consequences. Sometimes, highlighting the "computerness" of virtual agents has important benefits (e.g., see this example).
